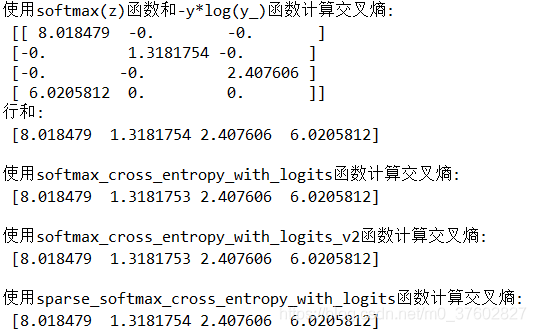

A study of tf.nn.sparse_softmax_cross_entropy_with_logits(logits=y, labels=tf.argmax(y_, 1)) - 阿言在学习's blog - CSDN Blog

python 3.x - tf.nn.sparse_softmax_cross_entropy_with_logits is not working properly and shape/rank error - Stack Overflow

tensorflow - what's the difference between softmax_cross_entropy_with_logits and losses.log_loss? - Stack Overflow

python - What are logits? What is the difference between softmax and softmax_cross_entropy_with_logits? - Stack Overflow

Lecture 5: ValueError: Only call `sparse_softmax_cross_entropy_with_logits` with named arguments (labels=..., logits=..., ...) · Issue #90 · pkmital/CADL · GitHub

"ValueError: Only call `sparse_softmax_cross_entropy_with_logits` with named arguments" encountered in the training loop · Issue #15 · KGPML/Hyperspectral · GitHub

Alexis Sanders 🇺🇦 on Twitter: "Has anyone seen "Best Answer" (noticing: https://t.co/T6uHSGORtm, https://t.co/ddtG88Oqjq, & https://t.co/DdfS6678mK)? @aaranged @JarnoVanDriel https://t.co/jbsInUrzL2" / Twitter

python - In a two class issue with one-hot label, why tf.losses.softmax_cross_entropy outputs very large cost - Stack Overflow

bug report: shouldn't use tf.nn.sparse_softmax_cross_entropy_with_logits to calculate loss · Issue #3 · AntreasAntoniou/MatchingNetworks · GitHub

tf.nn.sparse_softmax_cross_entropy_with_logits & "ValueError: Rank mismatch: Rank of labels (received 2) should equal rank of logits minus 1 (received 2)." · Issue #224 · aymericdamien/TensorFlow-Examples · GitHub